Gemini 2.0 Flash Now Available, Introducing Flash-Lite & Pro Models

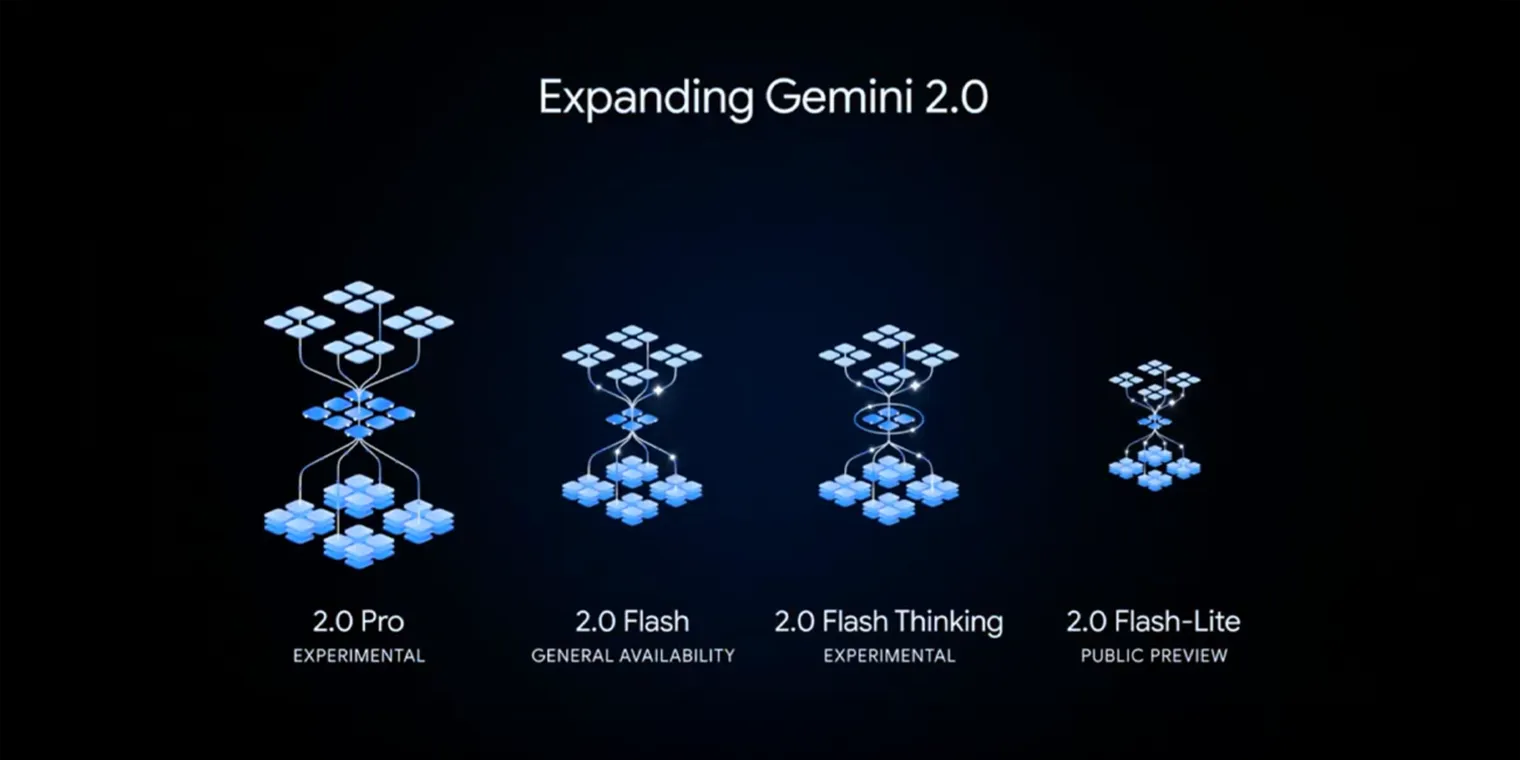

Google has expanded its AI lineup with new Gemini 2.0 models, following the stable release of Gemini 2.0 Flash, which is now generally available through the Gemini API in Google AI Studio and Vertex AI. The latest models focus on improving both performance and affordability, and they are positioned to compete with alternatives from OpenAI and DeepSeek.

One of the new additions is Gemini 2.0 Flash-Lite, a streamlined version of the Gemini model that outperforms its predecessor, 1.5 Flash, while remaining fast and cost-efficient. It comes with a 1 million token context window and supports multimodal input, making it ideal for large-scale tasks like image captioning. Flash-Lite is currently in public preview via Google AI Studio and Vertex AI.

Another exciting update is Gemini 2.0 Flash Thinking Experimental, now available for free to all Gemini users. This model integrates with YouTube, Google Maps, and Google Search, giving it access to real-time data. It also explains its reasoning, offering insight into how it generates responses, similar to DeepSeek-R1 or OpenAI’s o3-mini.

Google has also introduced Gemini 2.0 Pro Experimental, built for advanced coding and complex prompts, featuring a 2 million token context window. This model is available for developers through Google AI Studio and Vertex AI, and for Gemini Advanced users via the Gemini app.
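To put the 1 million and 2 million token context windows in perspective, here is a minimal Python sketch that estimates whether a piece of text would fit. It assumes a rough average of 4 characters per token, which is only a rule of thumb and not Google's tokenizer. The helper names `estimate_tokens` and `models_that_fit` are hypothetical and not part of any Gemini SDK.

```python
# Context window sizes as stated in this article (in tokens).
CONTEXT_WINDOWS = {
    "Gemini 2.0 Flash-Lite": 1_000_000,
    "Gemini 2.0 Pro Experimental": 2_000_000,
}

# Assumption: roughly 4 characters per token. Real tokenizers vary by
# language and content, so treat the result as a ballpark figure only.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for the text, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def models_that_fit(text: str) -> list[str]:
    """List the models whose stated context window can hold the estimate."""
    needed = estimate_tokens(text)
    return [name for name, limit in CONTEXT_WINDOWS.items() if needed <= limit]
```

Under this heuristic, a 1 million token window corresponds to roughly 4 million characters of plain text. For an exact count, the model's own token counting should be used instead of this estimate.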

Reference: Google Blog - Gemini 2.0 is now available to everyone